Evaluations

Evaluations give you a holistic view of your search quality across many queries. You can identify blind spots, find opportunities to improve, and get a quantitative understanding of how search is performing for your customers.

Evaluating relevance

In Evaluations, we judge search results on their relevance to the query. This is done by taking the content of the result (e.g. the product) and comparing it to the intent of the query. Results are judged on a 3-point scale:

- 🟢 GREAT - perfectly relevant result

- 🟡 OK - partially relevant

- 🔴 BAD - irrelevant

To evaluate results, we support using an LLM as a judge (LLM-as-a-judge). This enables rapid iteration, evaluating hundreds of results in seconds.
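Once results have been judged, the labels can be summarized across queries. The following is a minimal sketch, not the product's own scoring method: the numeric weights in `LABEL_WEIGHTS` and the `summarize_judgments` helper are assumptions made for illustration, since only the three labels themselves are defined above.

```python
from collections import Counter

# Hypothetical numeric weights per label (an assumption for illustration;
# only the GREAT / OK / BAD labels come from the scale above).
LABEL_WEIGHTS = {"GREAT": 1.0, "OK": 0.5, "BAD": 0.0}


def summarize_judgments(labels):
    """Return (label distribution, mean score) for a list of judgment labels."""
    if not labels:
        raise ValueError("no judgments to summarize")
    counts = Counter(labels)
    total = len(labels)
    # Share of results that received each label.
    distribution = {label: counts.get(label, 0) / total for label in LABEL_WEIGHTS}
    # Mean of the per-result weights; an unknown label raises KeyError.
    mean_score = sum(LABEL_WEIGHTS[label] for label in labels) / total
    return distribution, mean_score


distribution, mean_score = summarize_judgments(["GREAT", "GREAT", "OK", "BAD"])
print(distribution)
print(mean_score)
```

Tracking the full distribution alongside the mean is useful because a single average can hide a growing share of BAD results.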

Other forms of evaluation

Today we focus on evaluating search results on their relevance to the query. There are many other ways to evaluate quality, for example using extrinsic metrics such as click-through rate (CTR) or engagement.

We also support evaluating on these metrics. If this is something you're interested in, please get in touch.

Common evaluation strategies

There are multiple strategies for evaluating search. The methods described below differ in which queries they select for evaluation, so that you can understand your search quality on each set of queries.

It is common for these strategies to be combined. For example, you may evaluate both on the HEAD traffic (most popular), and on queries that generate the most revenue.

Traffic based analysis

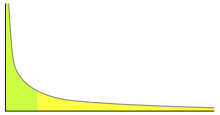

HEAD

These are the most popular queries by frequency. Typically these make up the first 33% of the traffic distribution by volume.

Random sample

Using a random sample of traffic is a good way to get a holistic view of the overall search experience. If you were to randomly select a user, what would their search experience be?
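One way to approximate "a randomly selected user" is to sample queries in proportion to how often they are searched. This is a minimal sketch under assumptions: the `query_counts` mapping (query text to search count) and the `sample_queries` helper are hypothetical names, and your query logs may be shaped differently.

```python
import random


def sample_queries(query_counts, k, seed=None):
    """Draw k queries, each weighted by its search count (with replacement)."""
    rng = random.Random(seed)  # a fixed seed makes the sample reproducible
    queries = list(query_counts)
    weights = [query_counts[q] for q in queries]
    # Frequent queries are drawn more often, mirroring real traffic.
    return rng.choices(queries, weights=weights, k=k)


# Hypothetical search counts, for illustration only.
counts = {"shoes": 40, "shirts": 20, "red dress": 10, "wool socks": 5}
sample = sample_queries(counts, k=10, seed=7)
print(sample)
```

Sampling with replacement means a popular query can appear more than once. Deduplicate the sample if you want each query evaluated only once.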

Torso and Tail

Torso and Tail refer to the middle and last 33% of the traffic distribution by volume. Each of these queries is searched less often than a HEAD query, but together they make up a large portion of the total traffic. Solving these queries well can lead to great gains in aggregate performance.
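The HEAD, Torso, and Tail split can be sketched as a walk over queries sorted by frequency, cutting at one third and two thirds of cumulative volume. This is an illustrative sketch only: the `segment_by_traffic` helper and the `query_counts` input are assumptions, and the exact cut points are a convention you may want to adjust.

```python
def segment_by_traffic(query_counts):
    """Split queries into HEAD, TORSO, and TAIL thirds of cumulative volume."""
    total = sum(query_counts.values())
    if total <= 0:
        raise ValueError("query_counts must contain positive traffic")
    segments = {"HEAD": [], "TORSO": [], "TAIL": []}
    cumulative = 0
    # Most frequent first; ties are broken alphabetically for stable output.
    ordered = sorted(query_counts.items(), key=lambda kv: (-kv[1], kv[0]))
    for query, count in ordered:
        # Assign by the share of traffic already covered before this query.
        share_before = cumulative / total
        if share_before < 1 / 3:
            segments["HEAD"].append(query)
        elif share_before < 2 / 3:
            segments["TORSO"].append(query)
        else:
            segments["TAIL"].append(query)
        cumulative += count
    return segments


# Hypothetical search counts, for illustration only.
counts = {
    "shoes": 40, "shirts": 20, "pants": 15, "red dress": 10,
    "wool socks": 5, "blue hat": 5, "linen scarf": 5,
}
print(segment_by_traffic(counts))
```

In this example a single query covers the HEAD, while the Tail holds four distinct queries, which illustrates why the Torso and Tail contain many more unique queries for a similar share of traffic.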

Use case based analysis

It's common to focus on specific use cases when evaluating quality. Example use cases include specific user patterns and important product or business use cases. A few examples are listed below.

Categories

Evaluate search on categorical searches, such as "shirts", "pants", "shoes", "mens", "womens".

Top KPI drivers

Evaluate the searches that lead to the most revenue or other KPIs.
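Selecting the top KPI drivers can be as simple as ranking queries by the KPI they generate. The sketch below assumes a hypothetical `kpi_by_query` mapping from query text to attributed revenue; how revenue is attributed to a query depends on your own analytics.

```python
import heapq


def top_queries_by_kpi(kpi_by_query, n):
    """Return the n (query, kpi) pairs with the highest KPI value, best first."""
    return heapq.nlargest(n, kpi_by_query.items(), key=lambda kv: kv[1])


# Hypothetical revenue per query, for illustration only.
revenue = {"shoes": 1200.0, "shirts": 800.0, "pants": 950.0}
print(top_queries_by_kpi(revenue, 2))
```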

Comparing Indexes

We offer several tools for comparing results between indexes and configurations. See comparing results.